Survey123 is a great option for deploying field data collection, especially when it must be done fast. It would behoove the survey designer, however, to consider how the purpose and function of the survey might evolve during the collection process. In a perfect world we would take ample time to design and test before deployment, which would allow limitations and problems to shake out. But when the luxury of time is not available, a few considerations drawn from the following survey scenario might help you out.

You are in a pinch because emergency work must be tracked and managed. You are given the task of designing, deploying, and managing a Survey123 survey to allow users in the field to capture information for managers in the office. Rapid turnaround is necessary, so you open up Survey123 Connect and start cracking.

One consideration that might make your life easier is the choice of field names. The ‘name’ column in the spreadsheet controlling the survey in Survey123 Connect determines the field names of the survey table when the survey is published to ArcGIS Online. These names have a high likelihood of becoming visible as the table gets queried, downloaded, and spreadsheet-ified. In a perfect world we would control the process, giving users moderated access through deployment of apps and maps and scripting the complicated, routine data manipulation. But in an imperfect world, working under the gun, ad-hoc requests for an Excel file will scratch the itch. Therefore, think about the field names, and remember that plain-English meanings go a long way.
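
As a rough illustration of the idea, here is a small Python sketch that turns a question label into a readable field name. The helper `to_field_name` and its 31-character cap are my own assumptions for the example, not Survey123 rules, so check the naming limits of your own environment before relying on it.

```python
import re


def to_field_name(label: str, max_len: int = 31) -> str:
    """Turn a plain-English question label into a readable field name.

    max_len is an assumed, conservative cap chosen for this sketch.
    """
    # Lowercase, then collapse every run of non-alphanumeric characters to "_".
    name = re.sub(r"[^a-z0-9]+", "_", label.lower()).strip("_")
    # Avoid a leading digit, which many databases reject in field names.
    if name and name[0].isdigit():
        name = "f_" + name
    # Trim to the cap and drop any underscore left dangling by the cut.
    return name[:max_len].rstrip("_")


print(to_field_name("Is the work complete?"))
print(to_field_name("Inspected by"))
```

A name like `inspected_by` still makes sense to someone opening the exported spreadsheet months later, which is the whole point.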

Another overlooked feature you should include in this kind of survey is a clear ‘complete’ condition. ‘Complete’ means different things to different people. Is the survey complete? Is the work complete? Is the inspection complete? Do you need multiple complete conditions to provide a report of the current stage of ‘complete’ the zone is in? Can there be a partial complete? When surveys get complex it becomes harder and harder to answer the question “Is it complete?” Try to think this through beforehand instead of scraping the resulting survey table for answers after the fact.
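
One way to think this through is to write the stages of ‘complete’ down explicitly before building the form. The Python sketch below does that for a hypothetical pair of yes/no answers; the stage names and the parameters `work_complete` and `inspected` are assumptions made up for illustration, not part of any Survey123 API.

```python
from enum import Enum
from typing import Optional


class Stage(Enum):
    WORK_REMAINING = "work remaining"
    AWAITING_INSPECTION = "work complete, awaiting inspection"
    INSPECTED = "work complete and inspected"


def completion_stage(work_complete: Optional[str], inspected: Optional[str]) -> Stage:
    """Map two yes/no answers to exactly one stage of 'complete'."""
    # Anything other than an explicit "yes" counts as work still remaining.
    if work_complete != "yes":
        return Stage.WORK_REMAINING
    # Work is done, but an unanswered or "no" inspection keeps it pending.
    if inspected != "yes":
        return Stage.AWAITING_INSPECTION
    return Stage.INSPECTED


print(completion_stage("yes", None).value)
```

If you cannot write a function like this for your survey, that is a hint the ‘complete’ condition is still ambiguous.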

Try to predict what would constitute ‘Key Performance Indicators’ in your survey and structure your select lists to make them easy to consume for future Dashboard applications. Where possible, design your select list items as mutually exclusive conditions. We want to know if a tag is yellow or red or green. We want to be able to assume if a tag is not red and is not green, it is yellow. This will make charts and count filters that much easier in Dashboard applications.
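
To see why mutual exclusivity pays off, consider this minimal Python sketch. The list of submitted tag values is invented for the example. Because every record carries exactly one of the three values, the counts add up to the total, and any one category can be derived from the other two.

```python
from collections import Counter

ALLOWED_TAGS = {"red", "yellow", "green"}

# Hypothetical tag values, one per submitted survey record.
submitted = ["red", "green", "yellow", "green", "red", "green"]

# Every record must carry exactly one allowed value.
unexpected = [tag for tag in submitted if tag not in ALLOWED_TAGS]
if unexpected:
    raise ValueError(f"unexpected tag values: {unexpected}")

counts = Counter(submitted)

# Not red and not green means yellow, so the count follows by elimination.
yellow_by_elimination = len(submitted) - counts["red"] - counts["green"]

print(dict(counts))
print(yellow_by_elimination == counts["yellow"])
```

A select list that allowed overlapping or multiple values would break that last equality, and every chart built on it would need special handling.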

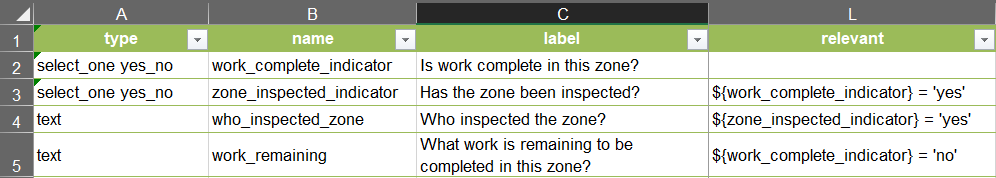

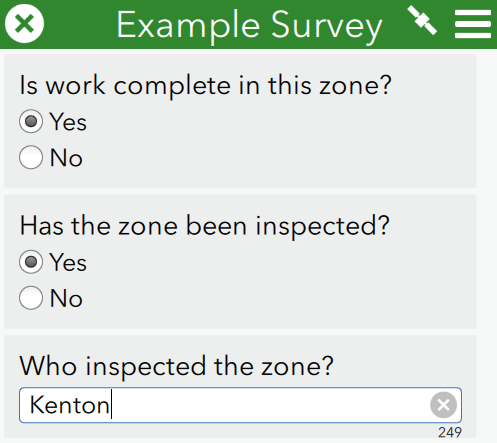

Continuing our scenario, we will make a survey that captures a ‘Yes’ or ‘No’ indicator for work being done in a zone. If the work is complete, we will ask if the work has been inspected. If the work has been inspected, who inspected it? Alternatively, if the work is not complete, what work is remaining?

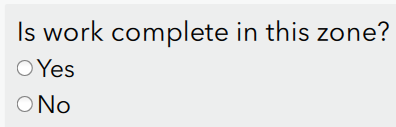

After publishing this survey, we handle the logistics of creating a group for this survey, sharing the form and feature layer to that group, and adding our field users to the group as members. Our users have downloaded the survey on their mobile devices through the Survey123 app and have started collecting data. The logic is linear and straightforward. Because of the ‘relevant’ field, the user opens the survey to one question and, depending on the answer, is prompted to answer the next question. The first and only question available looks like this.

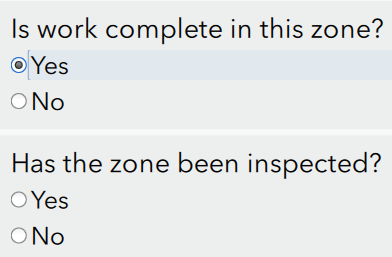

And after answering ‘Yes’ the next question appears, and so on.

By keeping irrelevant questions out of the survey for that collection point, we maximize end-user compliance and minimize erroneous data entry.

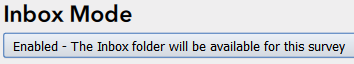

But after the survey gets deployed and users have been submitting for a while, we get news. A field manager has just reported that, due to a recent decision, additional work will have to be done in half the zones that have already been inspected. While we had planned for each survey to be over and done once the field worker submitted it, field workers will now have to reopen submitted surveys and change answers. To support this, we switch the Inbox Mode from ‘Disabled’ to ‘Enabled’ and republish the survey.

After updating or re-downloading the survey, the user gets an inbox with submitted surveys.

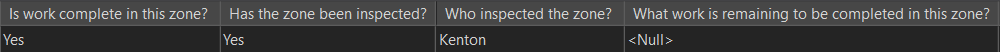

The survey is driven straight from the data in the survey table. You can see in the following images how straightforward this mechanic is. Submitting a survey writes the input values to the table, and those values are read back to populate the form when the survey is reopened.

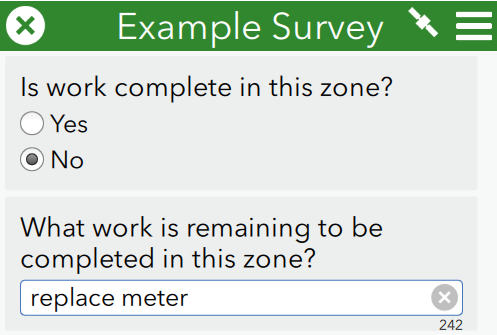

But the work is no longer complete in the field, so the user will naturally change the answers to the following.

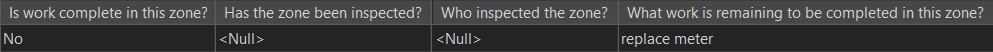

After submitting the survey, let's look at what the record looks like in the data table.

Now we have a problem. It was critical to track who inspected the zone the first time around, but that attribute is now NULL because it became irrelevant when the answer to the first question became ‘No’. What if I need to answer the question, “Who inspected this zone before the work indicator was changed to ‘No’”? What if the meter gets replaced and the resulting inspection gets performed by someone else? How do I provide a list of all the inspectors who inspected work in the zone?

In a more complex survey, perhaps where the logic branches significantly, what happens when a question towards the top gets answered differently? Due to the relevant field conditions, this might cause the loss of dozens of questions collected during the initial survey when the next surveyor opens, answers, and submits that survey. Even toggling the Yes/No answer back and forth is enough to clear out the previous answers if the survey gets submitted. It is important to know if you need to retain this information before you start supporting the editing of existing surveys.
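
The behavior described above can be mimicked in a few lines of Python. This is only a simulation of what we observed, not how Survey123 is implemented, and the field names (`work_complete`, `work_inspected`, `inspected_by`, `work_remaining`) are hypothetical stand-ins for the questions in our scenario.

```python
from typing import Callable, Dict, List, Optional

Record = Dict[str, Optional[str]]

# Each field is paired with a rule saying when it is relevant.
# Rules are checked in order against the values already saved.
RELEVANT: Dict[str, Callable[[Record], bool]] = {
    "work_complete": lambda r: True,
    "work_inspected": lambda r: r.get("work_complete") == "yes",
    "inspected_by": lambda r: r.get("work_inspected") == "yes",
    "work_remaining": lambda r: r.get("work_complete") == "no",
}


def submit(answers: Record) -> Record:
    """Save relevant answers; irrelevant fields are written as None (NULL)."""
    saved: Record = {}
    for field, is_relevant in RELEVANT.items():
        saved[field] = answers.get(field) if is_relevant(saved) else None
    return saved


def lost_fields(before: Record, after: Record) -> List[str]:
    """List fields that held a value before the edit and are empty after it."""
    return [f for f in before if before[f] is not None and after.get(f) is None]


first = submit({"work_complete": "yes", "work_inspected": "yes", "inspected_by": "J. Smith"})

# The record is reopened from the inbox and the first answer flips to "no".
edited = dict(first, work_complete="no", work_remaining="Additional work required")
second = submit(edited)

print(lost_fields(first, second))
```

Running a check like `lost_fields` against your own relevance rules, even on paper, shows which answers are at risk before you turn on editing.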

With thoughtful design and the available Survey123 tools, problems like those described above can be handled and the business processes satisfied. When time is of the essence, it is important to take a moment to anticipate these problems before they arise. You can then make a deliberate decision to sacrifice functionality for speedy execution, without running into a roadblock that disrupts the productivity of the survey effort.

What do you think?