Starting with ArcGIS Pro 3.4, and continuing in 3.5, the conda environment that ships with ArcGIS Pro includes the pydantic Python package. Pydantic has been my go-to data validation library for years, but using it alongside ESRI’s Python tools used to require cloning the arcgispro-py3 environment and then installing pydantic into the clone, so I’m very happy that it’s now included out of the box. Pydantic claims it is “the most widely used data validation library for Python” and I have no doubts as to the accuracy of that claim. It can quickly validate that your data meets the expectations you set, and it produces excellent messages when the input is invalid. What’s more, the pydantic documentation is top notch. I’d like to share how I’ve been using pydantic successfully for a few years to validate data before I write it to an ESRI geodatabase, and highlight the advantages of this pre-validation step over just attempting to write data to the geodatabase and handling any errors that arise.

No BaseModel here

I was first introduced to pydantic through the BaseModel. A BaseModel lets you build a Python class with a defined set of fields, each of which holds data of a specified type and can carry lots of other constraints. If you’re familiar with core Python’s dataclasses, a BaseModel is very similar, but has lots of extra goodies. BaseModels are great for data validation when you know the structure of the data beforehand, but they aren’t designed to be created dynamically, which is what you need in order to validate data before it is written to an arbitrary table/feature class in a geodatabase. I suppose you could write a BaseModel for each and every table/feature class in your geodatabase and then use those models to validate your data. That would work, but it would be a lot of work, and any change you made to your database would have to be made to your BaseModels as well. That smells of tech debt and a lot of opportunity for human error. Fortunately, there is a better way!
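To make the maintenance burden concrete, here is a minimal sketch of what one hand-written BaseModel for a single feature class might look like. The class name is hypothetical, the field names are borrowed from the example table used later in this post, and it assumes pydantic v2. Every schema change in the geodatabase would need a matching edit here.

```python
from typing import Annotated, Literal, Optional

from pydantic import BaseModel, ConfigDict, Field, ValidationError


class MyFeatureClassRow(BaseModel):
    """Hand-maintained mirror of one table's schema (hypothetical example)."""

    # Reject keys that are not fields of the table.
    model_config = ConfigDict(extra="forbid")

    # Non-nullable short integer field.
    SHORT_FIELD: int = Field(ge=-32768, le=32767)
    # Nullable text field with a length of 10.
    TEXT_FIELD: Optional[Annotated[str, Field(max_length=10)]] = None
    # Nullable field restricted to the codes of a coded value domain.
    CODED_VALUE_SHORT_FIELD: Optional[Literal[0, 1, -1]] = None


row = MyFeatureClassRow(SHORT_FIELD=50, TEXT_FIELD="good text")
print(row.model_dump())

try:
    MyFeatureClassRow(SHORT_FIELD=50_000, TEXT_FIELD="good text")
except ValidationError as exc:
    print(exc.error_count(), "validation error(s)")
```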

The TypeAdapter

To validate data before it’s written to a geodatabase I employ a TypeAdapter alongside an arcpy Describe object. I can use the describe object to:

- List the fields of the table/feature class

- Get the datatype of each of the fields

- Determine which fields are non-nullable

- Discover the allowed codes for fields that are controlled by a coded value domain

- Get the allowable range for fields controlled by a range domain

- Get the maximum length for each text field

- All of the above, for each subtype of a table/feature class if it is subtyped

With all that information gathered using arcpy, we can programmatically feed it into the TypeAdapter and then use the TypeAdapter’s `validate_python` method to validate that our data meets the expectations of our table/feature class before we insert it. I’ve wrapped this up so that creating the TypeAdapter is as simple as calling the ssp_esri_pydantic.make_table_adapter() function with the path to the table or feature class in a geodatabase.
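The general idea can be sketched without arcpy. The block below is not the actual make_table_adapter implementation; it is a minimal illustration, assuming pydantic v2, that builds a TypedDict dynamically from a hard-coded list of field descriptions. `FIELDS`, `annotation_for`, and `sketch_table_adapter` are hypothetical names, and the list stands in for what would really be read from the Describe object. Geometry and subtypes are left out to keep it short.

```python
from typing import Annotated, Any, Literal, Optional

from pydantic import ConfigDict, Field, TypeAdapter, ValidationError
from typing_extensions import NotRequired, TypedDict

# Hypothetical stand-in for metadata that would be gathered with arcpy
# (field name, field type, nullability, text length, domain codes).
FIELDS = [
    {"name": "SHORT_FIELD", "type": "SmallInteger", "nullable": False},
    {"name": "TEXT_FIELD", "type": "String", "nullable": True, "length": 10},
    {"name": "CODED_VALUE_SHORT_FIELD", "type": "SmallInteger",
     "nullable": True, "codes": [0, 1, -1]},
]


def annotation_for(field: dict) -> Any:
    """Translate one field description into an annotation pydantic understands."""
    if "codes" in field:
        # Coded value domain: only the listed codes are allowed.
        base = Literal[tuple(field["codes"])]
    elif field["type"] == "SmallInteger":
        base = Annotated[int, Field(ge=-32768, le=32767)]
    elif field["type"] == "String":
        base = Annotated[str, Field(max_length=field["length"])]
    else:
        base = Any  # field types this sketch does not cover are left unchecked
    if field["nullable"]:
        # Nullable fields may be omitted or set to None.
        return NotRequired[Optional[base]]
    return base


def sketch_table_adapter(name: str, fields: list) -> TypeAdapter:
    """Build a TypeAdapter for a dynamically created TypedDict."""
    row_type = TypedDict(name, {f["name"]: annotation_for(f) for f in fields})
    # Reject keys that are not fields of the table.
    row_type.__pydantic_config__ = ConfigDict(extra="forbid")
    return TypeAdapter(row_type)


adapter = sketch_table_adapter("MY_FEATURECLASS", FIELDS)
print(adapter.validate_python({"SHORT_FIELD": 50, "TEXT_FIELD": "good text"}))

try:
    adapter.validate_python({"SHORT_FIELD": 99999, "TEXT_FIELD": "far too long for the field"})
except ValidationError as exc:
    print(exc)
```

Because the schema is derived from the field descriptions at run time, a change to the table only requires rebuilding the adapter, not editing any class by hand.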

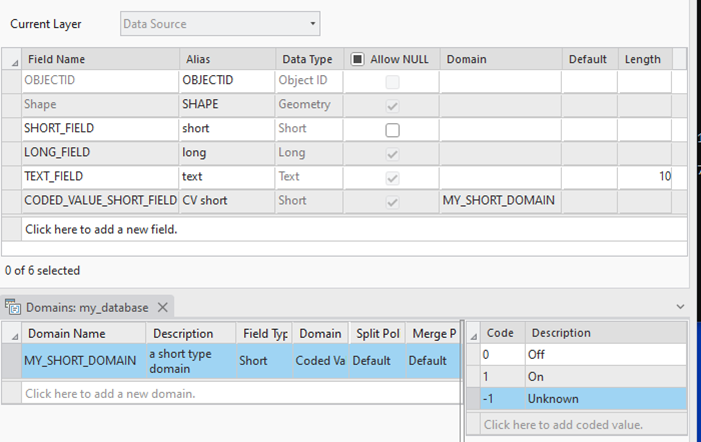

To demonstrate some of the results you can get from validating data with the type adapter, we’ll create a type adapter from the following table:

We have a few fields with different data types and one field that is controlled by a coded value domain.

In the following examples I’ll send a dictionary to a function I’ve written, `test_ta_and_insert_data`, which passes the dictionary it receives to the type adapter’s `validate_python` method and then sends the same input data directly to an insert cursor. Both calls are wrapped in try/except blocks that print any exception that occurs; if no exception occurs, the function prints that the call was successful.
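The real function lives in the accompanying repository. As a rough illustration of its shape only, here is a sketch that takes the validation and insert steps as plain callables so it runs without arcpy. `check_and_insert`, `run_step`, `fake_validate`, and `fake_insert` are hypothetical names, and the two stand-ins do not mimic how pydantic or an insert cursor actually behave.

```python
def run_step(label, func, row):
    """Call func(row) and report either success or the exception raised."""
    try:
        func(row)
    except Exception as exc:  # broad on purpose: the goal is only to report
        print(f"{label} exception. Exception message:")
        print(f"{type(exc).__name__}: {exc}")
        return False
    print(f"{label} success.")
    return True


def check_and_insert(row, validate, insert):
    """Print the input row, then try validation and insertion independently."""
    print("Input data:")
    width = max(len(name) for name in row)
    for name, value in row.items():
        print(f"{name:<{width}}: {value!r}")
    validated = run_step("validate_python", validate, row)
    inserted = run_step("InsertCursor", insert, row)
    return validated, inserted


inserted_rows = []


def fake_validate(row):
    # Stand-in for a type adapter's validate_python.
    if row["SHORT_FIELD"] > 32767:
        raise ValueError("SHORT_FIELD should be less than or equal to 32767")


def fake_insert(row):
    # Stand-in for an insert cursor's insertRow; it accepts everything.
    inserted_rows.append(row)


result = check_and_insert(
    {"SHORT_FIELD": 50_000, "TEXT_FIELD": "some text"}, fake_validate, fake_insert
)
```

Running both steps independently, rather than skipping the insert when validation fails, is what makes the side-by-side comparisons in the cases below possible.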

For all the examples ‘my_point’ will simply be a point:

```python
my_point = arcpy.Point(-90.18502, 38.62466)
```

Playing along at home (or work…?!)

If you’d like to follow along, I’ve uploaded the python code and a notebook that accompanies this blog to GitHub. You can access the python code here, and notebook here.

Case 0: All values are good

These inputs are all valid inputs for our fields; this should be successfully validated by the type adapter and the insert cursor should successfully insert the row.

```python
my_row = {
    "SHORT_FIELD": 50,
    "TEXT_FIELD": "good text",
    "LONG_FIELD": 1_000_000,
    "CODED_VALUE_SHORT_FIELD": 1,
    "Shape": my_point,
}
test_ta_and_insert_data(my_row)
```

```text
Input data:
SHORT_FIELD            : 50
TEXT_FIELD             : 'good text'
LONG_FIELD             : 1000000
CODED_VALUE_SHORT_FIELD: 1
Shape                  : POINT(-90.18502 38.62466 NaN NaN)
validate_python success.
InsertCursor success.
```

Case 0 Result: As expected, good values are validated by the type adapter and the insert cursor was successful.

Case 1: Value too big for field type

A value of 50,000, which is greater than 32,767 (the maximum value for a short integer), is sent to a short integer field.

```python
my_row = {
    "SHORT_FIELD": 50_000,
    "TEXT_FIELD": "some text",
    "Shape": my_point,
}
test_ta_and_insert_data(my_row)
```

```text
Input data:
SHORT_FIELD: 50000
TEXT_FIELD : 'some text'
Shape      : POINT(-90.18502 38.62466 NaN NaN)
validate_python exception. Exception message:
ValidationError: 1 validation error for MY_FEATURECLASS
SHORT_FIELD
  Input should be less than or equal to 32767 [type=less_than_equal, input_value=50000, input_type=int]
    For further information visit https://errors.pydantic.dev/2.11/v/less_than_equal
InsertCursor exception. Exception message:
RuntimeError: The value type is incompatible with the field type. [SHORT_FIELD]
```

Case 1 Result: Both tell us that there is a problem with the SHORT_FIELD, but I find the type adapter’s error message more helpful. It tells me the maximum value for the field as well as the value that was supplied. The InsertCursor simply tells us that the value is incompatible; it gives no details about what was wrong with the value.

Case 2: Wrong datatype

A string is sent to the short integer field.

```python
my_row = {
    "SHORT_FIELD": "should be an int",
    "TEXT_FIELD": "some text",
    "Shape": my_point,
}
test_ta_and_insert_data(my_row)
```

```text
Input data:
SHORT_FIELD: 'should be an int'
TEXT_FIELD : 'some text'
Shape      : POINT(-90.18502 38.62466 NaN NaN)
validate_python exception. Exception message:
ValidationError: 1 validation error for MY_FEATURECLASS
SHORT_FIELD
  Input should be a valid integer, unable to parse string as an integer [type=int_parsing, input_value='should be an int', input_type=str]
    For further information visit https://errors.pydantic.dev/2.11/v/int_parsing
InsertCursor exception. Exception message:
RuntimeError: The value type is incompatible with the field type. [SHORT_FIELD]
```

Case 2 Result: The type adapter tells us that we gave it a string rather than an integer, and shows us what that input string was. The InsertCursor gives us the same error we got for passing an integer that was too big for the short integer field.

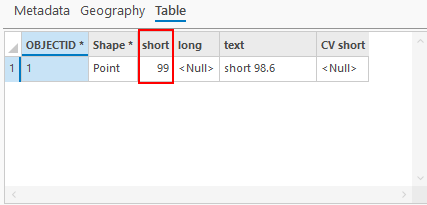

Case 3: Float to an int field

A float of 98.6 is sent to the short integer field.

```python
my_row = {
    "SHORT_FIELD": 98.6,
    "TEXT_FIELD": "short 98.6",
    "Shape": my_point,
}
test_ta_and_insert_data(my_row)
```

```text
Input data:
SHORT_FIELD: 98.6
TEXT_FIELD : 'short 98.6'
Shape      : POINT(-90.18502 38.62466 NaN NaN)
validate_python exception. Exception message:
ValidationError: 1 validation error for MY_FEATURECLASS
SHORT_FIELD
  Input should be a valid integer, got a number with a fractional part [type=int_from_float, input_value=98.6, input_type=float]
    For further information visit https://errors.pydantic.dev/2.11/v/int_from_float
InsertCursor success.
```

Case 3 Result: The type adapter once again gives us a clear error message: we can’t have a fractional value for an integer. You can come to your own conclusions about whether the insert cursor succeeding and inserting 99 instead of 98.6 is good, bad, or indifferent, but I’m firmly in the camp that says the database should give me an error and should not change the value I sent without telling me.
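For context on how pydantic treats floats headed for an integer field, here is a small sketch assuming pydantic v2. In the default (lax) mode a float with no fractional part is accepted as an integer, a float with a fractional part is rejected, and strict mode rejects floats altogether. The `error_type` helper is a hypothetical convenience for this example.

```python
from pydantic import TypeAdapter, ValidationError

int_adapter = TypeAdapter(int)


def error_type(value, strict=False):
    """Return the pydantic error type for value, or None if it validates."""
    try:
        int_adapter.validate_python(value, strict=strict)
    except ValidationError as exc:
        return exc.errors()[0]["type"]
    return None


print(error_type(98.6))               # fractional part: rejected in lax mode
print(error_type(98.0))               # whole-number float: accepted in lax mode
print(error_type(98.0, strict=True))  # strict mode: floats are not integers
```

Whether a table adapter should run in strict mode is a judgment call; lax mode still never rounds a value silently.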

Case 4: Text too long

A string is sent to the text field, but the string is too long to fit into the field because the text field has a length of 10 and the input string is longer than 10.

```python
my_row = {
    "SHORT_FIELD": 20,
    "TEXT_FIELD": "a text string that's too big for the field ",
    "Shape": my_point,
}
test_ta_and_insert_data(my_row)
```

```text
Input data:
SHORT_FIELD: 20
TEXT_FIELD : 'a text string that's too big for the field '
Shape      : POINT(-90.18502 38.62466 NaN NaN)
validate_python exception. Exception message:
ValidationError: 1 validation error for MY_FEATURECLASS
TEXT_FIELD
  String should have at most 10 characters [type=string_too_long, input_value="a text string that's too big for the field ", input_type=str]
    For further information visit https://errors.pydantic.dev/2.11/v/string_too_long
InsertCursor exception. Exception message:
RuntimeError: The row contains a bad value. [Field length exceeded. Field: TEXT_FIELD. Value: a text string that's too big for the field ]
```

Case 4 Result: Both errors are very similar in this case: each names the field and the offending value, and the type adapter also states the maximum length. Also note that in the type adapter error the input value is surrounded by quotes. This is incredibly helpful for finding issues with text values that look good but have trailing whitespace at the end of the string, where that trailing whitespace is what makes the string too long.

Case 5: Multiple errors

Sometimes input data has more than one issue (gasp). Let’s see what happens if a value that’s too large for a short int and a text value that’s too long for the field are sent for one feature.

```python
my_row = {
    "SHORT_FIELD": 99999,
    "TEXT_FIELD": "a text string that's too big for the field",
    "Shape": my_point,
}
test_ta_and_insert_data(my_row)
```

```text
Input data:
SHORT_FIELD: 99999
TEXT_FIELD : 'a text string that's too big for the field'
Shape      : POINT(-90.18502 38.62466 NaN NaN)
validate_python exception. Exception message:
ValidationError: 2 validation errors for MY_FEATURECLASS
SHORT_FIELD
  Input should be less than or equal to 32767 [type=less_than_equal, input_value=99999, input_type=int]
    For further information visit https://errors.pydantic.dev/2.11/v/less_than_equal
TEXT_FIELD
  String should have at most 10 characters [type=string_too_long, input_value="a text string that's too big for the field", input_type=str]
    For further information visit https://errors.pydantic.dev/2.11/v/string_too_long
InsertCursor exception. Exception message:
RuntimeError: The value type is incompatible with the field type. [SHORT_FIELD]
```

Case 5 Result: The type adapter tells us that we have two issues with the data. The insert cursor finds the first error and stops. If we went back, corrected the error identified by the insert cursor, and tried again, we’d get hit by a different error. I personally like the way the type adapter works because it feels a lot less like playing a game of whack-a-mole, where you fix one error and then another pops up!

Note: Pydantic does have a failfast mode that would stop validation at the first error, which is great for some situations, but for this use case, having all the errors is very beneficial, so failfast is not enabled in our type adapter.
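Since all the problems arrive in a single ValidationError, they can also be consumed programmatically rather than just printed. A minimal sketch, assuming pydantic v2 and a hypothetical two-field `Row` type standing in for the real table adapter: `ValidationError.errors()` returns one dictionary per problem, with the location, message, error type, and offending input.

```python
from typing import Annotated

from pydantic import Field, TypeAdapter, ValidationError
from typing_extensions import TypedDict


class Row(TypedDict):
    """Hypothetical two-field row used only for this illustration."""

    SHORT_FIELD: Annotated[int, Field(ge=-32768, le=32767)]
    TEXT_FIELD: Annotated[str, Field(max_length=10)]


adapter = TypeAdapter(Row)
bad_row = {
    "SHORT_FIELD": 99999,
    "TEXT_FIELD": "a text string that's too big for the field",
}

problems = []
try:
    adapter.validate_python(bad_row)
except ValidationError as exc:
    problems = exc.errors()

for problem in problems:
    field = ".".join(str(part) for part in problem["loc"])
    print(f"{field}: {problem['msg']} (got {problem['input']!r})")
```

A structure like this is handy for writing a rejection report with one line per bad value when loading a large batch.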

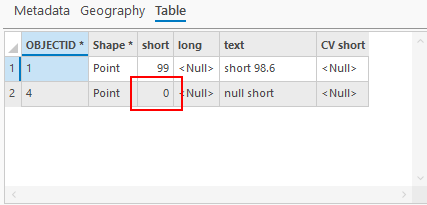

Case 6: Null for a non-nullable field

No value is provided to the SHORT_FIELD, which is non-nullable in the feature class.

```python
my_row = {
    "TEXT_FIELD": "null short",
    "Shape": my_point,
}
test_ta_and_insert_data(my_row)
```

```text
Input data:
TEXT_FIELD: 'null short'
Shape     : POINT(-90.18502 38.62466 NaN NaN)
validate_python exception. Exception message:
ValidationError: 1 validation error for MY_FEATURECLASS
SHORT_FIELD
  Field required [type=missing, input_value={'TEXT_FIELD': 'null shor...18502, 38.62466, #, #)>}, input_type=dict]
    For further information visit https://errors.pydantic.dev/2.11/v/missing
InsertCursor success.
```

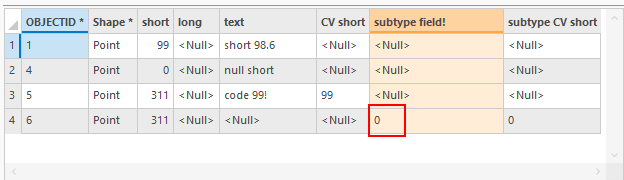

Case 6 Result: The type adapter fails and says that the short field is required. The insert cursor reports success, and if we look in the database our short int field has been populated with a 0.

Similar to case 3, I’ll leave you to come to your own conclusions, but I’d prefer to know that I wasn’t providing a value to a non-nullable field and that the database was going to populate it with a value, as opposed to it populating a value for me and not even telling me that it was doing so.

Note: Pydantic says that the field is required. This is different from a field being ‘required’ in the ESRI ecosystem. The ‘required’ property of a field in the ESRI world means that the field cannot be deleted from the table/feature class; it has absolutely nothing to do with the values in the field. What pydantic calls ‘required’ is analogous to a field whose ESRI ‘isNullable’ property is False, which means that the field must have a value for each row in the table/feature class.
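One way this mapping could be expressed in pydantic is sketched below, assuming pydantic v2. The `Row` type and its field names are hypothetical: a non-nullable field becomes a required key with a plain type, while a nullable field becomes a key that may be omitted or set to None.

```python
from typing import Optional

from pydantic import TypeAdapter, ValidationError
from typing_extensions import NotRequired, TypedDict


class Row(TypedDict):
    """Hypothetical row with one non-nullable and one nullable field."""

    NOT_NULLABLE: int                     # isNullable is False
    NULLABLE: NotRequired[Optional[int]]  # isNullable is True


adapter = TypeAdapter(Row)


def error_types(row):
    """Return the list of pydantic error types raised for row (empty if valid)."""
    try:
        adapter.validate_python(row)
    except ValidationError as exc:
        return [error["type"] for error in exc.errors()]
    return []


print(error_types({"NOT_NULLABLE": 1}))                    # nullable field omitted
print(error_types({"NOT_NULLABLE": 1, "NULLABLE": None}))  # nullable field set to None
print(error_types({}))                                     # non-nullable field omitted
print(error_types({"NOT_NULLABLE": None}))                 # non-nullable field set to None
```

Note that omitting a non-nullable field and explicitly passing None for it produce different error types, which helps when tracking down where a missing value came from.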

Case 7: Extra field provided

Add an extra field “NON_EXISTENT_FIELD” to our input data.

```python
my_row = {
    "SHORT_FIELD": 311,
    "TEXT_FIELD": "a string!",
    "NON_EXISTENT_FIELD": 10,
    "Shape": my_point,
}
test_ta_and_insert_data(my_row)
```

```text
Input data:
SHORT_FIELD       : 311
TEXT_FIELD        : 'a string!'
NON_EXISTENT_FIELD: 10
Shape             : POINT(-90.18502 38.62466 NaN NaN)
validate_python exception. Exception message:
ValidationError: 1 validation error for MY_FEATURECLASS
NON_EXISTENT_FIELD
  Extra inputs are not permitted [type=extra_forbidden, input_value=10, input_type=int]
    For further information visit https://errors.pydantic.dev/2.11/v/extra_forbidden
InsertCursor exception. Exception message:
RuntimeError: Cannot find field 'NON_EXISTENT_FIELD'
```

Case 7 Result: Both errors are similar and clearly convey the issue, but for our case of inserting data into a database I think the InsertCursor’s error message is a little clearer. Remember that pydantic is a generic data validation library, so its error messages aren’t written solely for errors that may arise when inserting data into a database.

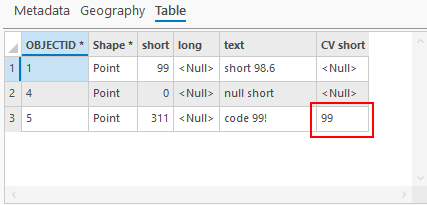

Case 8: Value not in CV domain

Send the value 99 to a field controlled by a coded value domain and 99 isn’t a code in the domain assigned to the field.

```python
my_row = {
    "SHORT_FIELD": 311,
    "TEXT_FIELD": "code 99!",
    "CODED_VALUE_SHORT_FIELD": 99,
    "Shape": my_point,
}
test_ta_and_insert_data(my_row)
```

```text
Input data:
SHORT_FIELD            : 311
TEXT_FIELD             : 'code 99!'
CODED_VALUE_SHORT_FIELD: 99
Shape                  : POINT(-90.18502 38.62466 NaN NaN)
validate_python exception. Exception message:
ValidationError: 1 validation error for MY_FEATURECLASS
CODED_VALUE_SHORT_FIELD
  Input should be 0, 1 or -1 [type=literal_error, input_value=99, input_type=int]
    For further information visit https://errors.pydantic.dev/2.11/v/literal_error
InsertCursor success.
```

Case 8 Result: The type adapter rejected our row since 99 isn’t a value in the domain, but the insert cursor let it go into the database without issue. I now have a value in my database that doesn’t conform to the domain assigned to the field. This is another case where you should come to your own conclusion, but in my opinion, if we went to the trouble of setting up a coded value domain and assigned it to our field, I’d like to ensure that all my data in the field conforms to the expected values.
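The `literal_error` above suggests the domain’s codes ended up in a `typing.Literal`. Here is a minimal sketch of that idea, assuming pydantic v2. The `coded_values` dictionary is a hypothetical stand-in for a domain’s code-to-description mapping, and the descriptions are invented for illustration.

```python
from typing import Literal

from pydantic import TypeAdapter, ValidationError

# Hypothetical coded value domain: {code: description}.
coded_values = {0: "No", 1: "Yes", -1: "Unknown"}

# Build Literal[0, 1, -1] from the domain's codes at run time.
DomainCode = Literal[tuple(coded_values)]
code_adapter = TypeAdapter(DomainCode)

print(code_adapter.validate_python(1))

try:
    code_adapter.validate_python(99)
except ValidationError as exc:
    print(exc.errors()[0]["msg"])
```

Because the Literal is built from whatever codes the domain currently holds, adding a code to the domain and rebuilding the adapter is enough to make the new code valid.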

Case 9: No value supplied for subtype field

Send a row that is missing a value for the subtype field of a table/feature class.

Note: Our feature class was not subtyped originally, so we will add some new fields, set one of them as the subtype field for the feature class, and assign a coded value domain to the other field for subtype 1 only.

Since we’ve modified the schema of our feature class, we need to re-create our table adapter so that it knows about the new fields.

```python
print("Adding fields to feature class for subtype examples.")
arcpy.AddField_management(my_fc_path, "SUBTYPE_FIELD", "SHORT", field_alias="subtype field!")
arcpy.AddField_management(my_fc_path, "SUBTYPE_CV_SHORT_FIELD", "SHORT", field_alias="subtype CV short")

print("Setting subtype field and adding subtypes.")
arcpy.SetSubtypeField_management(my_fc_path, "SUBTYPE_FIELD")
arcpy.AddSubtype_management(my_fc_path, 1, "subtype 1")
arcpy.AddSubtype_management(my_fc_path, 2, "subtype 2")

print("Adding the 'MY_SHORT_DOMAIN' domain only to subtype 1 of the 'SUBTYPE_CV_SHORT_FIELD' field.")
arcpy.AssignDomainToField_management(
    my_fc_path,
    "SUBTYPE_CV_SHORT_FIELD",
    "MY_SHORT_DOMAIN",
    1,
)

print("Recreating table adapter.")
my_ta = make_table_adapter(my_fc_path)

my_row = {
    "SHORT_FIELD": 311,
    "SUBTYPE_CV_SHORT_FIELD": 0,
    "Shape": my_point,
}
test_ta_and_insert_data(my_row)
```

```text
Adding fields to feature class for subtype examples.
Setting subtype field and adding subtypes.
Adding the 'MY_SHORT_DOMAIN' domain only to subtype 1 of the 'SUBTYPE_CV_SHORT_FIELD' field.
Recreating table adapter.
Input data:
SHORT_FIELD           : 311
SUBTYPE_CV_SHORT_FIELD: 0
Shape                 : POINT(-90.18502 38.62466 NaN NaN)
validate_python exception. Exception message:
ValidationError: 1 validation error for tagged-union[MY_FEATURECLASS-subtype 1,MY_FEATURECLASS-subtype 2]
  Unable to extract tag using discriminator 'SUBTYPE_FIELD' [type=union_tag_not_found, input_value={'SHORT_FIELD': 311, 'SUB...18502, 38.62466, #, #)>}, input_type=dict]
    For further information visit https://errors.pydantic.dev/2.11/v/union_tag_not_found
InsertCursor success.
```

Case 9 Result: The type adapter rejected our row since we didn’t supply a value for the SUBTYPE_FIELD. Of all the error messages produced by the type adapter, this one is the least intuitive to me. The error arises because the type adapter isn’t sure what it should be validating the data against: without a subtype code, it can’t verify that we’re following the rules for whatever subtype the data should be. Under the covers, make_table_adapter actually makes a different pydantic TypeAdapter for each subtype in our table/feature class and combines them into one all-encompassing adapter. It then uses the subtype value supplied in the row to ‘discriminate’ which subtype’s TypeAdapter to validate against. Since we didn’t give it a code, it couldn’t determine which one to use.

The insert cursor on the other hand happily inserts a new row into the table and if we look at the table, we now have a value that says it is subtype 0. Once again, it’s up to you if this is what you’d like to happen, but I would never want to see a row in my database with a subtype value that isn’t a subtype for my table / feature class.

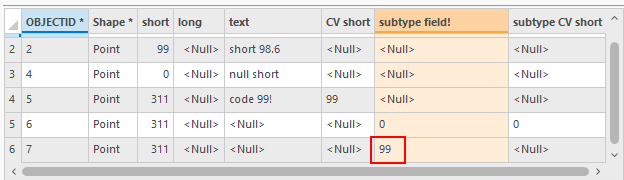

Case 10: Bad subtype code

Send a row that has a value for the subtype field which is not one of the subtype codes of the table/feature class.

```python
my_row = {
    "SHORT_FIELD": 311,
    "SUBTYPE_FIELD": 99,
    "Shape": my_point,
}
test_ta_and_insert_data(my_row)
```

```text
Input data:
SHORT_FIELD  : 311
SUBTYPE_FIELD: 99
Shape        : POINT(-90.18502 38.62466 NaN NaN)
validate_python exception. Exception message:
ValidationError: 1 validation error for tagged-union[MY_FEATURECLASS-subtype 1,MY_FEATURECLASS-subtype 2]
  Input tag '99' found using 'SUBTYPE_FIELD' does not match any of the expected tags: 1, 2 [type=union_tag_invalid, input_value={'SHORT_FIELD': 311, 'SUB...18502, 38.62466, #, #)>}, input_type=dict]
    For further information visit https://errors.pydantic.dev/2.11/v/union_tag_invalid
InsertCursor success.
```

Case 10 Result: The type adapter rejected our row since the input ‘tag’ 99 does not match the expected tags [1,2]. This is another case where the ESRI and pydantic vocabularies simply differ. What pydantic refers to here as a ‘tag’ is what we call a subtype code in the ESRI world.

You know where this is going… The insert cursor inserts a new row into the table and if we look at the table, we now have a value that says it is subtype 99. I wouldn’t want that in my database.

Case 11: Value for field with domain assigned to field at the subtype level isn’t in the domain

Send a row with an out-of-domain value for a field whose domain is assigned at the subtype level. The domain does not apply to the entire table, just to the subtype we’re targeting.

```python
my_row = {
    "SHORT_FIELD": 311,
    "SUBTYPE_FIELD": 1,
    "SUBTYPE_CV_SHORT_FIELD": 2,
    "Shape": my_point,
}
test_ta_and_insert_data(my_row)
```

```text
Input data:
SHORT_FIELD           : 311
SUBTYPE_FIELD         : 1
SUBTYPE_CV_SHORT_FIELD: 2
Shape                 : POINT(-90.18502 38.62466 NaN NaN)
validate_python exception. Exception message:
ValidationError: 1 validation error for tagged-union[MY_FEATURECLASS-subtype 1,MY_FEATURECLASS-subtype 2]
1.SUBTYPE_CV_SHORT_FIELD
  Input should be 0, 1 or -1 [type=literal_error, input_value=2, input_type=int]
    For further information visit https://errors.pydantic.dev/2.11/v/literal_error
InsertCursor success.
```

Case 11 Result: The type adapter rejected our row since the input value for the SUBTYPE_CV_SHORT_FIELD of 2 was not 0, 1, or -1.

Again, the insert cursor wrote the out of domain value to the database.

Case 12: Value for field with domain assigned to a different subtype, but no domain assigned to the input row’s subtype

This is the same as case 11, except that we’ll write to subtype 2 instead of subtype 1, and subtype 2 doesn’t have a domain assigned to the SUBTYPE_CV_SHORT_FIELD.

```python
my_row = {
    "SHORT_FIELD": 311,
    "SUBTYPE_FIELD": 2,
    "SUBTYPE_CV_SHORT_FIELD": 2,
    "Shape": my_point,
}
test_ta_and_insert_data(my_row)
```

```text
Input data:
SHORT_FIELD           : 311
SUBTYPE_FIELD         : 2
SUBTYPE_CV_SHORT_FIELD: 2
Shape                 : POINT(-90.18502 38.62466 NaN NaN)
validate_python success.
InsertCursor success.
```

Case 12 Result: The type adapter successfully validated our data. The value is the same one it rejected in case 11, but since subtype 2 doesn’t have a domain assigned to this field, the value we’re writing is allowed.

The insert cursor wrote the row to the database successfully as it should have.
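The subtype behaviour in cases 9 through 12 is consistent with a pydantic discriminated (tagged) union. The sketch below is not the make_table_adapter implementation; it is a hand-written illustration, assuming pydantic v2, with two hypothetical TypedDicts standing in for the per-subtype schemas. The subtype field is a `Literal` of the subtype code, and only subtype 1 restricts the other field to the domain’s codes.

```python
from typing import Annotated, Literal, Optional, Union

from pydantic import Field, TypeAdapter, ValidationError
from typing_extensions import NotRequired, TypedDict


class Subtype1(TypedDict):
    SUBTYPE_FIELD: Literal[1]
    # Domain assigned for this subtype only: codes 0, 1 and -1.
    SUBTYPE_CV_SHORT_FIELD: NotRequired[Optional[Literal[0, 1, -1]]]


class Subtype2(TypedDict):
    SUBTYPE_FIELD: Literal[2]
    # No domain for this subtype: any short integer is allowed.
    SUBTYPE_CV_SHORT_FIELD: NotRequired[
        Optional[Annotated[int, Field(ge=-32768, le=32767)]]
    ]


# The subtype field acts as the discriminator that picks which schema applies.
adapter = TypeAdapter(
    Annotated[Union[Subtype1, Subtype2], Field(discriminator="SUBTYPE_FIELD")]
)


def first_error_type(row):
    """Return the first pydantic error type for row, or None if it validates."""
    try:
        adapter.validate_python(row)
    except ValidationError as exc:
        return exc.errors()[0]["type"]
    return None


print(first_error_type({"SUBTYPE_CV_SHORT_FIELD": 0}))                      # no subtype code
print(first_error_type({"SUBTYPE_FIELD": 99}))                              # unknown subtype code
print(first_error_type({"SUBTYPE_FIELD": 1, "SUBTYPE_CV_SHORT_FIELD": 2}))  # out of domain
print(first_error_type({"SUBTYPE_FIELD": 2, "SUBTYPE_CV_SHORT_FIELD": 2}))  # no domain applies
```

With a discriminator, pydantic validates against exactly one subtype’s schema, so the errors refer only to the subtype the row claims to be rather than listing failures for every subtype.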

What the make_table_adapter() table adapter does NOT do

While the table adapter that results from the make_table_adapter function does a lot, it does not account for custom validation attribute rules, nor for anything that may be populated by a calculation attribute rule. Similarly, it does not enforce contingent values that may be set up in the database. If a field is a key for a relationship class, the table adapter will not do any extra key validation on the value. Lastly, nothing here validates the geometry beyond checking that a value is provided for the geometry of a feature in a feature class. You could pass a string to the geometry field and the table adapter would not have an issue (though the insert will clearly fail when you try to write a string as the geometry).

What’s holding you back?!

There’s a good chance that using this method to validate data before you insert it will catch something bad before it hits your database, and as you’ve seen, the error messaging from pydantic is consistent and typically very easy to understand. On top of that, the ability to catch more than one problem on a single record with one call to validate_python is huge if you’re working with messy data that someone asked you to add to the database! Hopefully you found this helpful, and happy inserting!
