Esri’s ArcGIS for Pipeline Referencing (APR) tools have officially been on the market for nearly four years, yet many operators are still weighing the transition to this new world of data maintenance. Numerous factors go into that decision, such as database schemas, cost, well-defined internal workflows, legacy systems, data collection workflows, and integrations. Other times it comes down to third-party software or to internal or external custom tools that cannot move to the new data models without a complete rewrite or an expensive upgrade. That is not always feasible, since the owners of those tools have no tie to, or stake in, the new models that would justify the cost of a rewrite.

That alone should not stop an operator from taking advantage of the APR tools. An updated data model, especially one that reduces costs in other departments, provides more ownership of the data and more ways to visualize assets, both in the office and in the field.

This article focuses on how you can still make that transition while continuing to use the legacy solutions built on the old data model, at least until the entire enterprise solution is upgraded to match the new Esri version and schema.

SSP Innovations carried out this type of deployment for one of our clients. In early 2019, we were contracted to help them migrate their data from the PODS 6 data model to the PODS Next Generation data model. This was prior to the official PODS 7 release. SSP Innovations has performed several PODS migrations, and this one was no different, except that the client still relied on a vendor’s software that needed data in the previous model in order to make informed decisions. The software was critical to their Integrity group and was midway through its contract lifecycle, so upgrading or switching vendors was not an option. They did not want this to stall their transition; as we all know, lining up contracts and finding the “right” time is almost impossible.

With SSP Innovations’ knowledge of both the previous and the new PODS data models, we scoped a nightly batch job that would take the data used by the Integrity software and programmatically migrate it back to the old PODS data model: essentially, a “reverse migration.” It seems easy, right? In essence, yes, but the intricacies of how the previous PODS model handled linear referencing, pipeline measures, and event measures required some finesse to get every calculation right and to write to the correct tables. Migrating to the new model involves some manual steps; this process, however, had to be fully automated, because updates had to be migrated nightly so the Integrity software could be used each day with the most current data available.
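To illustrate the kind of measure “finessing” involved, here is a minimal Python sketch, not the production code. It assumes, hypothetically, that the new model stores each event as a continuous route measure, that the legacy model expects a series identifier plus station values, and that stationing inside a series advances one-for-one with measure (no station equations). The `Series` record, its fields, and the `locate`/`locate_event` helpers are illustrative names, not PODS schema objects.

```python
from bisect import bisect_left, bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class Series:
    """Hypothetical legacy station series, positioned on the new route measure."""
    series_id: str
    begin_measure: float  # route measure where the series starts
    end_measure: float    # route measure where the series ends
    begin_station: float  # legacy station value at the start of the series


def locate(measure, series_list, at_end=False):
    """Translate a route measure into (series_id, station).

    series_list must be sorted by begin_measure and non-overlapping.
    A measure exactly on a boundary belongs to the series that starts there,
    unless at_end=True (an event's end point), in which case it belongs to
    the series that ends there.
    """
    starts = [s.begin_measure for s in series_list]
    search = bisect_left if at_end else bisect_right
    idx = search(starts, measure) - 1
    if idx < 0 or measure > series_list[idx].end_measure:
        raise ValueError(f"measure {measure} falls outside every series")
    s = series_list[idx]
    # Assumption: station advances one-for-one with measure inside a series.
    return s.series_id, s.begin_station + (measure - s.begin_measure)


def locate_event(begin, end, series_list):
    """Translate a linear event into (series_id, begin_station, end_station)."""
    b_id, b_sta = locate(begin, series_list)
    e_id, e_sta = locate(end, series_list, at_end=True)
    if b_id != e_id:
        # In this sketch each legacy row references one series, so an event
        # crossing a series boundary must be split before it is written.
        raise ValueError(f"event {begin}-{end} spans {b_id} and {e_id}")
    return b_id, b_sta, e_sta
```

With two series, A covering measures 0 to 1000 and B covering 1000 to 2500 with stationing starting at 5000, `locate_event(1200.0, 1800.0, series)` maps the event to series B, stations 5200 to 5800. The boundary rule matters: without the `at_end` distinction, an event ending exactly where a series ends would be wrongly reported as spanning two series.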

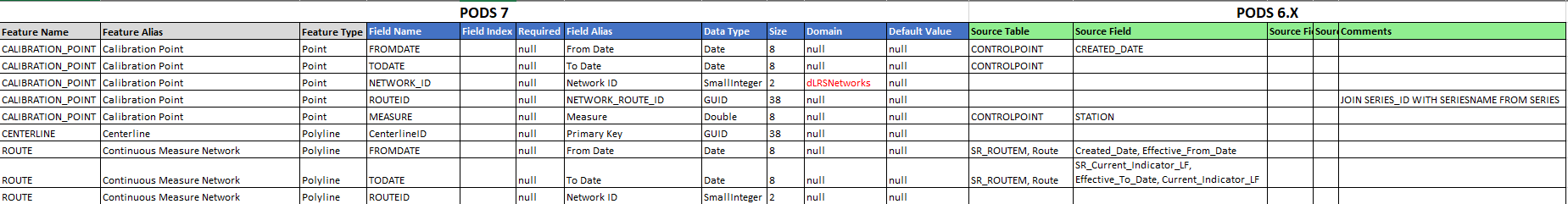

First, we created a mapping document showing where each piece of data lives in the new model and where it needed to go in the previous model, much like the migration mapping document we produced for the move to the new PODS model. Because this process was automated, however, the document also had to capture the correct filters and queries to guide development of the scripts.
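One way to make such a mapping document directly usable by automation is to express each row as data, including its filter, and render it into SQL. The sketch below is a hypothetical illustration using Python’s built-in `sqlite3` as a stand-in database; the `TableMapping` structure and all table and column names are invented for the example, not taken from PODS or the client’s scripts.

```python
import sqlite3
from dataclasses import dataclass


@dataclass(frozen=True)
class TableMapping:
    """One row of a (hypothetical) mapping document, expressed as data."""
    source_table: str   # table or view in the new model
    target_table: str   # table in the legacy model
    columns: dict       # legacy column -> source column or SQL expression
    where: str = "1=1"  # filter selecting only the rows the legacy model needs


def build_insert(m):
    """Render a mapping as an INSERT ... SELECT statement.

    Names come from the internal mapping document, not from user input,
    so they are interpolated directly into the SQL text.
    """
    targets = ", ".join(m.columns.keys())
    sources = ", ".join(m.columns.values())
    return (f"INSERT INTO {m.target_table} ({targets}) "
            f"SELECT {sources} FROM {m.source_table} WHERE {m.where}")


# Usage with throwaway in-memory tables (all names are illustrative).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE new_valve (id INTEGER, route_id TEXT, measure REAL, status TEXT);
    CREATE TABLE old_valve (ValveID INTEGER, LineID TEXT, Station REAL);
    INSERT INTO new_valve VALUES (1, 'R1', 10.0, 'ACTIVE'), (2, 'R1', 20.0, 'RETIRED');
""")
valves = TableMapping(
    source_table="new_valve",
    target_table="old_valve",
    columns={"ValveID": "id", "LineID": "route_id", "Station": "measure"},
    where="status = 'ACTIVE'",
)
conn.execute(build_insert(valves))
rows = conn.execute("SELECT * FROM old_valve").fetchall()
print(rows)  # [(1, 'R1', 10.0)]
```

Keeping the filter next to the column mapping means the document reviewers read and the statements the job executes cannot drift apart.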

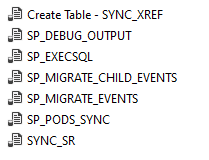

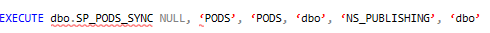

The nightly batch job was broken into several scripts, one for each building block needed to populate the previous data model. A single execution script runs them and can target one pipeline, several pipelines, or all of them.
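A minimal sketch of what such an execution script could look like, assuming Python and its standard `argparse` and `logging` modules. The step functions (`load_lines`, `load_series`, `load_events`), `all_pipeline_ids`, and the pipeline IDs are hypothetical placeholders for the real building-block scripts, whose internals the article does not describe.

```python
import argparse
import logging

log = logging.getLogger("reverse_migration")


# Hypothetical building blocks; each would read the new model and write the
# corresponding legacy tables for one pipeline.
def load_lines(pipeline_id): ...
def load_series(pipeline_id): ...
def load_events(pipeline_id): ...


STEPS = [load_lines, load_series, load_events]  # run in dependency order


def all_pipeline_ids():
    # Hypothetical: would query the new model for every pipeline.
    return ["P-100", "P-200", "P-300"]


def run(pipeline_ids, steps=STEPS):
    """Run every step for each pipeline; return {pipeline_id: error} for failures."""
    failures = {}
    for pid in pipeline_ids:
        for step in steps:
            try:
                step(pid)
            except Exception as exc:
                log.exception("%s failed on pipeline %s", step.__name__, pid)
                failures[pid] = f"{step.__name__}: {exc}"
                break  # skip this pipeline's remaining steps, continue with the next
    return failures


def parse_args(argv=None):
    parser = argparse.ArgumentParser(description="Nightly reverse migration")
    target = parser.add_mutually_exclusive_group(required=True)
    target.add_argument("--pipeline", nargs="+", metavar="ID",
                        help="one or more pipeline IDs")
    target.add_argument("--all", action="store_true", help="every pipeline")
    return parser.parse_args(argv)


def main(argv=None):
    args = parse_args(argv)
    ids = all_pipeline_ids() if args.all else args.pipeline
    failures = run(ids)
    return 1 if failures else 0  # non-zero exit lets the scheduler flag the run
```

A scheduler would invoke it through an entry point such as `raise SystemExit(main())`, passing either `--all` or `--pipeline P-100 P-200`. Logging a failed step and moving on is one design choice among several; it keeps a single problem pipeline from blocking the rest of the nightly load.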

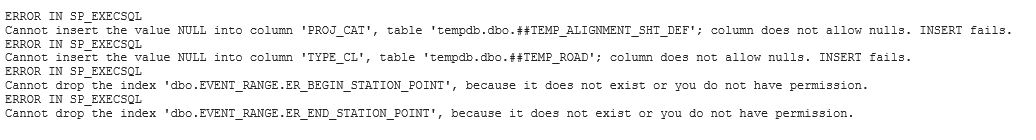

Both SSP and the client performed thorough QA/QC on all of the data to ensure the migrated data would not compromise downstream analytics. Robust error handling produced detailed technical feedback that the client’s developers could easily act on. As one of many QA/QC approaches, we used the custom views the client already had on their integrity database to verify that all of the data the Integrity software uses was populated, a comprehensive way to confirm the data had been migrated fully.
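A view-based completeness check can be sketched in a few lines. This is a hypothetical illustration: it uses Python’s `sqlite3` as a stand-in for the integrity database (the article does not name the database engine), and the view names and expected counts are invented. Each view is flagged if it fails to query, returns no rows, or returns a count different from an expected value, which could be derived from the corresponding queries against the new model.

```python
import sqlite3


def check_views(conn, views, expected_counts=None):
    """Flag consumer views that fail, return no rows, or return an unexpected count."""
    expected_counts = expected_counts or {}
    problems = []
    for view in views:
        try:
            (count,) = conn.execute(f"SELECT COUNT(*) FROM {view}").fetchone()
        except sqlite3.Error as exc:
            # A view that no longer works against the legacy tables is itself a finding.
            problems.append(f"{view}: query failed ({exc})")
            continue
        if count == 0:
            problems.append(f"{view}: no rows")
        elif view in expected_counts and count != expected_counts[view]:
            problems.append(f"{view}: {count} rows, expected {expected_counts[view]}")
    return problems
```

Because the views are the ones the downstream software already depends on, an empty list from this check is a reasonable (though not exhaustive) signal that the nightly load is complete.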

The result of this effort and rigorous testing is a script that runs in about 30 minutes each night, replacing the data in the integrity database so it is ready for the Integrity software the next day.

This example involved an upgrade from one version of the PODS model to another; however, the same methodology could be applied to any migration from one data model to another in the gas industry. Because SSP’s staff have extensive knowledge of both legacy and future-state data models, and of the processes and methodologies for migrating back and forth between them, SSP has the expertise to support any operator considering this path forward and to overcome the challenges and obstacles described in this article.

So whether it serves as a long-term or an interim solution to a common problem, there is a path forward. Especially in the utility world, with its ever-changing data models, this approach means that all parties, including editors, viewers, integrators, and consumers of the data, can keep using their tools and software, new or existing, without too much interruption or downtime.

What do you think?